Robobot mission

(→Joy) |

(→Main) |

||

| (55 intermediate revisions by one user not shown) | |||

| Line 2: | Line 2: | ||

== Robobot mission software == | == Robobot mission software == | ||

| + | |||

| + | '''NB! not valid for the 2023 version of the software'''. | ||

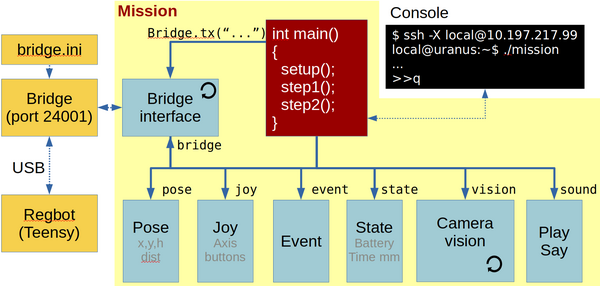

[[File:robobot_mission_block_overview.png | 600px]] | [[File:robobot_mission_block_overview.png | 600px]] | ||

| − | This figure shows the mission functional blocks. The | + | This figure shows the mission functional blocks. The primary connection is to the robobot_bridge, through which the robot is controlled. |

| − | The red | + | The red block is the main part where the behaviour is controlled. |

| + | Data elements are available in the bottom row of boxes. A camera block is available, where image processing is assumed to happen. Some vision example code is included as inspiration. | ||

| − | The blocks marked with a circle arrow | + | The blocks marked with a circle arrow are running in a thread to handle incoming data (from bridge or camera). |

The mission application executable is in /home/local/mission/build: | The mission application executable is in /home/local/mission/build: | ||

| − | local@ | + | local@Oscar:~ $ cd mission/build |

| − | local@ | + | local@Oscar:~/mission/build $ ./mission |

| − | + | Received, but not used: # Welcome to robot bridge (HAL) - send 'help' for more info | |

| − | + | Received, but not used: bridge 1 crc mod99 | |

| − | + | # Video device 0: width=1280, height=720, format=MJPG, FPS=25 | |

| − | # Welcome to | + | # Vision::setup: Starting image capture loop |

| − | + | # Setup finished OK | |

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | When the application starts, the welcome message is ignored (Received, but not used). | |

| + | It opens the camera and displays the used image size (1280 x 720) and format. | ||

| + | And the setup is finished. | ||

== Main == | == Main == | ||

| − | + | The main mission program example program is like this: | |

| − | + | 1 int main(int argc, char **argv) | |

| + | 2 { | ||

| + | 3 if (setup(argc, argv)) | ||

| + | 4 { // start mission | ||

| + | 5 std::cout << "# Robobot mission starting ...\n"; | ||

| + | 6 step1(); | ||

| + | 7 step2(); | ||

| + | 8 std::cout << "# Robobot mission finished ...\n"; | ||

| + | 9 // remember to close camera | ||

| + | 10 vision.stop(); | ||

| + | 11 sound.say("I am finished..", 0.2); | ||

| + | 12 while (sound.isSaying()) | ||

| + | 13 sleep(1); | ||

| + | 14 bridge.tx("regbot mute 1\n"); | ||

| + | 15 } | ||

| + | 16 return 0; | ||

| + | 17 } | ||

| − | + | A C++ program starts at the main(..) function. | |

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | First, the interfaces and data sources need to be set up in line 3; if setup fails, the program terminates. | |

| − | + | ||

| − | + | Line 5 is just a print to the console. | |

| − | + | Lines 6 and 7 are the two mission parts used in this example. | |

| − | + | Then there is just cleanup left. | |

| + | Line 14 shows the way to send data to the Regbot through the Bridge. "Bridge.tx()" is the function call to transmit data to the bridge. When the send text starts with "regbot" the rest is send to the Regbot by the bridge, as "regbot" is a data source name. | ||

| − | + | === Mission step === | |

| − | + | The first mission step in this example is: | |

| − | === | + | 1 void step1() |

| + | 2 { | ||

| + | 3 sound.say(". Step one.", 0.3); | ||

| + | 4 // remove old mission | ||

| + | 5 bridge.tx("regbot mclear\n"); | ||

| + | 6 // clear events received from last mission | ||

| + | 7 event.clearEvents(); | ||

| + | 8 // add mission lines | ||

| + | 9 bridge.tx("regbot madd vel=0.2:time=1\n"); | ||

| + | 10 bridge.tx("regbot madd tr=0.1:time=1,turn=-90\n"); | ||

| + | 11 bridge.tx("regbot madd :time=1\n"); | ||

| + | 12 // start this mission | ||

| + | 13 bridge.tx("regbot start\n"); | ||

| + | 14 // wait until finished | ||

| + | 15 // | ||

| + | 16 cout << "Waiting for step 1 to finish (event 0 is send, when mission is finished)\n"; | ||

| + | 17 event.waitForEvent(0); | ||

| + | 18 // sound.say(". Step one finished."); | ||

| + | 19 } | ||

| − | + | Line 3 calls a "sound" function called "sound.say("string", volume)"; the function converts the text to sound (in the English language) and plays that sound file (aa.wav). | |

| − | + | ||

| − | + | ||

| − | + | Line 5 sends a message to the Regbot to clear any old mission stored (this will also stop the active control of the robot wheels if a mission is running) | |

| − | + | Line 7 clears events. Events can be generated in any mission line (with number 1 to 30) and is automatically generated at the start (event 33) and stop (event 0) of a Regbot mission. | |

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | Line 9 to 11 adds new mission lines, the first part "regbot" tells the bridge that it is for the Regbot, and the second part "madd" tells the Regbot that this is a line to add. | |

| + | The rest of the string is decoded as a mission line. | ||

| − | + | In case of syntax error, a message is sent back from the Regbot, like: | |

| − | + | regbot:# UMissionThread::addLine syntax error thread 1 line 0: failed parameter at 2:time=1 | |

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| + | The first part "regbot:" says that it is from the Regbot, the rest of the line says that in "thread 1 line 0", there is an error. The offending part is shown "2:time=1", here the error was that the velocity part "vel=0.2" was written as "vel=0,2", and the comma is used as a separation of commands. | ||

| − | + | Line 13 tells the Regbot to start the just downloaded mission lines. | |

| − | + | Line 17 waits for event 0 to happen, indicating "end of the mission". | |

| − | + | === Setup === | |

| − | + | ||

| − | + | The setup function called all data modules in turn. | |

| + | The data modules will subscribe to the relevant data from the bridge and the Regbot. | ||

| − | + | The bridge module that receives the returned data from the bridge will, in turn, ask all the data modules if they handle this message type. | |

| + | The code is in line 40 in the "bridge.cpp" file. | ||

| − | + | == Vision == | |

| − | The | + | The vision setup opens the camera with these lines in file "vision.cpp" |

| − | + | // line 64 ff | |

| + | // prepare to open the camera | ||

| + | int deviceID = dev; // 0 = open default camera | ||

| + | int apiID = cv::CAP_V4L2; // Video for Linux version 2 | ||

| + | // open selected camera using selected API | ||

| + | cap.open(deviceID, apiID); | ||

| + | // check if we succeeded | ||

| + | camIsOpen = cap.isOpened(); | ||

| + | if (not camIsOpen) | ||

| + | { | ||

| + | cerr << "ERROR! Unable to open camera\n"; | ||

| + | } | ||

| − | == | + | ==== Capture image thread ==== |

| − | + | If opening is successful, then a thread is started (line 102): | |

| − | = | + | // start thread to keep buffer empty |

| + | printf("# Vision::setup: Starting image capture loop\n"); | ||

| + | listener = new thread(startloop, this); | ||

| − | The | + | The "startLoop" calls "loop" (line 136ff) |

| − | + | void UVision::loop() | |

| + | { | ||

| + | while (camIsOpen and not terminate) | ||

| + | { // keep framebuffer empty | ||

| + | if (useFrame) | ||

| + | { // grab and decode next image | ||

| + | cap.read(frame); | ||

| + | // mark as available | ||

| + | gotFrame = not frame.empty(); | ||

| + | useFrame = not gotFrame; | ||

| + | } | ||

| + | else | ||

| + | // just grab the image - mark it as used | ||

| + | cap.grab(); | ||

| + | frameSerial++; | ||

| + | } | ||

| + | } | ||

| − | + | As long as no one has set the boolean "useFrame=true", the loop will just call "cap.grab()" to keep the frame buffer empty. | |

| − | + | When "useFrame" is true, the next image will be saved in the "frame" image buffer. | |

| − | + | The function "getNewestFrame()" will tell the loop to capture an image and then wait until the image is in the frame buffer. | |

| − | + | ==== Process image ==== | |

| − | + | ||

| − | The | + | The function "processImage()" is intended to be called from one of the mission steps, and this example is overly complicated, but some of the important lines are shown here: |

| − | + | 170 bool UVision::processImage(float seconds) | |

| − | + | { // process images in 'seconds' seconds | |

| − | + | ... | |

| − | + | 182 getNewestFrame(); | |

| − | + | if (gotFrame) | |

| − | + | { // save the image - with a number | |

| + | const int MSL = 100; | ||

| + | char s[MSL]; | ||

| + | snprintf(s, MSL, "sandberg_%03d.png", n); | ||

| + | t3.now(); | ||

| + | 200 cv::imwrite(s, frame); | ||

| + | printf("Image save took %.3f sec\n", t3.getTimePassed()); | ||

| + | } | ||

| + | ... | ||

| + | ballBoundingBox.clear(); | ||

| + | 207 terminate = doFindBall(); | ||

| + | ... | ||

| + | return terminate or not camIsOpen; | ||

| + | } | ||

| − | + | Line 182 requests a fresh image, "gotFrame" is true if successful. | |

| − | + | Line 200 saves the image to a file (n is the frame number). | |

| − | + | Line 207 calls an image analysis function and returns true if a ball is found. | |

| − | + | ==== Find ball OpenCv example ==== | |

| − | + | To illustrate some of the OpenCV calls, the example function "doFineBall" highlights are: | |

| − | + | bool UVision::doFindBall() | |

| + | { // process pipeline to find | ||

| + | // bounding boxes of balls with matched colour | ||

| + | 242 cv::Mat yuv; | ||

| + | 244 cv::cvtColor(frame, yuv, cv::COLOR_BGR2YUV); | ||

| + | int h = yuv.rows; | ||

| + | int w = yuv.cols; | ||

| + | 247 cv::imwrite("yuv_balls_01.png", yuv); | ||

| + | // color for filter | ||

| + | 251 cv::Vec3b yuvOrange = cv::Vec3b(128,88,187); | ||

| + | 252 cv::Mat gray1(h,w, CV_8UC1); | ||

| + | // test all pixels | ||

| + | for (int r = 0; r < h; r++) | ||

| + | { // get pointers to pixel-row for destination image | ||

| + | 256 uchar * pOra = (uchar*) gray1.ptr(r); // gray | ||

| + | for (int c = 0; c < w; c++) | ||

| + | { // go through all pixels in this row | ||

| + | int d; | ||

| + | 260 cv::Vec3b p = yuv.at<cv::Vec3b>(r,c); | ||

| + | 261 d = uvDistance(p, yuvOrange); | ||

| + | 262 *pOra = 255 - d; | ||

| + | pOra++; // increase to next destination pixel | ||

| + | } | ||

| + | } | ||

| + | // do static threshold at value 230, max is 255, and mode is 3 (zero all pixels below threshold) | ||

| + | cv::Mat gray2; | ||

| + | 285 cv::threshold(gray1, gray2, 230, 255, 3); | ||

| + | // remove small items with a erode/delate | ||

| + | // last parameter is iterations and could be increased | ||

| + | cv::Mat gray3, gray4; | ||

| + | 290 cv::erode(gray2, gray3, cv::Mat(), cv::Point(-1,-1), 1); | ||

| + | cv::dilate(gray3, gray4, cv::Mat(), cv::Point(-1,-1), 1); | ||

| − | + | Line 242 creates an OpenCV image handle called "yuv" | |

| − | + | Line 244 converts the fresh image to be in YUV colour coding; this has isolated brightness to the channel Y and the colour to two dimensions U and V. | |

| − | + | Line 247 Saves the YUV image to a file (as if it were a BGR image), this is to be used to find the colour (Y and V) of the ball to be detected. | |

| − | + | Line 251 Inserts the found colour (found in an image application from the "yuv_balls_01.png" file) | |

| − | + | Line 252 Creates a gray-scale image of the same size as the original image (gray values from 0 to 255, CV_8UC1 is 8-bit unsigned with one channel). | |

| − | + | Line 256 Gets a pointer to the first pixel in the grayscale image (to write the filtered image) | |

| − | + | ||

| − | + | Line 260 Gets the YUV pixel at position (r,c) as a vector with 3 byte sized values (cv:Vec3b). | |

| − | + | Line 261 Gets the colour difference between the selected U,V value (line 251) and the UV value of this pixel, by just adding the distance in the U direction to the distance in the Y direction, as: | |

| + | 225 int UVision::uvDistance(cv::Vec3b pix, cv::Vec3b col) | ||

| + | { /// format is Y,V,U and Y is not used | ||

| + | int d = abs(pix[1] - col[1]) + abs(pix[2] - col[2]); | ||

| + | The output is limited to maximum 255. | ||

| − | + | Line 262 Writes the result to the grayscale image so that a small distance is white (255). | |

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | Line 285 Then thresholds the image to a new image called "gray2". Values above 230 (no more than 25 values from the selected colour) are likely to be the from the ball colour we are looking for. | |

| − | + | Line 290 The thresholded image is then further filtered. | |

| − | + | ||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

== Camera == | == Camera == | ||

| − | |||

| − | + | ===Camera calibration=== | |

| − | + | To use the camera to determine distances, calibration is needed. | |

| − | + | A rough calibration is used that, in most cases, is sufficient. | |

| − | + | ||

| − | + | The camera calibration consists of a camera matrix and a lens distortion vector. | |

| + | This is set in the camera class definition in the file 'ucamera.h': | ||

| − | + | /** camera matrix is a 3x3 matrix (raspberry PI typical values) | |

| + | * pix ---1---- ---2--- ---3--- -3D- | ||

| + | * 1 (x) 980 0 640 (X) | ||

| + | * 2 (y) 0 980 480 (Y) | ||

| + | * 3 (w) 0 0 1 (Z) | ||

| + | * where [1,1] and [2,2] is focal length, | ||

| + | * and [1,3] is half width center column (assuming image is 1280 pixels wide) | ||

| + | * and [2,3] is half height center row (assuming image is 960 pixels high) | ||

| + | * [X,Y,Z] is 3D position (in camera coordinated (X=right, Y=down, Z=front), | ||

| + | * [x,y,w] is pixel position for 3D position, when normalized, so that w=1 | ||

| + | */ | ||

| + | const cv::Mat cameraMatrix = (cv::Mat_<double>(3,3) << | ||

| + | 980, 0, 640, | ||

| + | 0, 980, 480, | ||

| + | 0, 0, 1); | ||

| + | /** | ||

| + | * camera radial distortion vector | ||

| + | * 1 (k1) 0.14738 | ||

| + | * 2 (k2) 0.0117267 | ||

| + | * 3 (p1) 0 | ||

| + | * 4 (p2) 0 | ||

| + | * 5 (k3) -0.14143 | ||

| + | * where k1, k2 and k3 is radial distortion params | ||

| + | * and p1, p2 are tangential distortion | ||

| + | * see https://docs.opencv.org/2.4/doc/tutorials/calib3d/camera_calibration/camera_calibration.html | ||

| + | * */ | ||

| + | const cv::Mat distortionCoefficients = (cv::Mat_<double>(1,5) << | ||

| + | 0.14738, | ||

| + | 0.0117267, | ||

| + | 0, | ||

| + | 0, | ||

| + | -0.14143); | ||

| − | The | + | The matrix 'cameraMatrix' holds the focal length set to approximately 980 pixels (in this image resolution) and the optical centre of the image set to the geometric centre of the image frame. |

| + | The vector 'distortionCoefficients' is set to some values estimated in an earlier student project. | ||

| − | == | + | ===Camera coordinate conversion matrix=== |

| − | + | The camera is placed on the robot at some distance from the origo of the robot coordinate system. | |

| − | + | The default position and tilt is set in the class definition (uvision.h): | |

| − | + | const float camPos[3] = {0.13,-0.02, 0.23}; // in meters | |

| + | const float camTilt = 22 * M_PI / 180; // in radians | ||

| + | cv::Mat1f camToRobot; | ||

| − | + | And the conversion matrix from camera coordinates to robot coordinates are added in uvision.cpp setup() | |

| − | + | float st = sin(camTilt); | |

| + | float ct = cos(camTilt); | ||

| + | camToRobot = (cv::Mat1f(4,4) << ct, 0.f, st, camPos[0], | ||

| + | 0.f , 1.f, 0.f , camPos[1], | ||

| + | -st, 0.f, ct, camPos[2], | ||

| + | 0.f , 0.f, 0.f , 1.f); | ||

| − | |||

| − | + | This coordinate conversion matrix is used to find the position of an object (e.g. a ball) once the ball's position is found in camera coordinates. | |

| − | + | ||

| − | + | == ArUco == | |

| − | + | OpenCV has a library function to detect ArUco codes and estimate their position in camera coordinates. | |

| − | + | This requires that the camera is calibrated with a camera matrix and a lens distortion vector. These are implemented in the camera class (UCamera.h). | |

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | The coordinate system used for detection is camera coordinates: (x,y,z) where x is to the right, y is down and z is forward and rotation around the same axes. | |

| − | + | [[File:i12432_annotated_20200105_091123.850.png | 400px]] | |

| − | + | An ArUco marker seen by the robot in my home domain. | |

| − | == | + | === Ecample code === |

| − | + | An example code to extract the codes and save the ArUco marker position in robot coordinates are implemented as a 'ArUcoVals' class in the uaruco.h and uaruco.cpp files. The found values are stored in an array 'arucos' of class objects of type 'ArUcoVal' (also in the uaruco.h and aruco.cpp files) | |

| − | + | The extraction is in the function | |

| + | int ArUcoVals::doArUcoProcessing(cv::Mat frame, int frameNumber, UTime imTime) | ||

| + | { | ||

| + | cv::Mat frameAnn; | ||

| + | const float arucoSqaureDimensions = 0.100; //meters | ||

| + | vector<int> markerIds; | ||

| + | vector<vector<cv::Point2f>> markerCorners; //, rejectedcandidates; | ||

| + | cv::aruco::DetectorParameters parameters; | ||

| + | //seach DICT on docs.opencv.org | ||

| + | cv::Ptr < cv::aruco::Dictionary> markerDictionary = cv::aruco::getPredefinedDictionary(cv::aruco::PREDEFINED_DICTIONARY_NAME::DICT_4X4_100); | ||

| + | // marker position info | ||

| + | vector<cv::Vec3d> rotationVectors, translationVectors; | ||

| + | UTime t; // timing calculation (for log) | ||

| + | t.now(); // start timing | ||

| + | // clear all flags (but maintain old information) | ||

| + | setNewFlagToFalse(); | ||

| + | /** Each marker has 4 marker corners into 'markerCorners' in image pixel coordinates (float x,y). | ||

| + | * Marker ID is the detected marker ID, a vector of integer. | ||

| + | * */ | ||

| + | cv::aruco::detectMarkers(frame, markerDictionary, markerCorners, markerIds); | ||

| + | // marker size is same as detected markers | ||

| + | if (markerIds.size() > 0) | ||

| + | { // there are markers in image | ||

| + | if (debugImages) | ||

| + | { // make a copy for nice saved images | ||

| + | frame.copyTo(frameAnn); | ||

| + | cv::aruco::drawDetectedMarkers(frameAnn, markerCorners, markerIds); | ||

| + | } | ||

| + | // | ||

| + | cv::aruco::estimatePoseSingleMarkers(markerCorners, | ||

| + | arucoSqaureDimensions, | ||

| + | cam->cameraMatrix, | ||

| + | cam->distortionCoefficients, | ||

| + | rotationVectors, | ||

| + | translationVectors); | ||

| + | } | ||

| + | else | ||

| + | printf("# No markers found\n"); | ||

| − | + | The functions '' 'cv::aruco::detectMarkers(...)' '' and '' 'cv::aruco::estimatePoseSingleMarkers(...)' '' has the functionality, but requires som data setup to function, as shown above. | |

| − | + | All found markers are then saved and their position and orientation is converted to robot coordinates. | |

| + | Robot coordinates are defined as (x,y,z), where x is forward, y is left and z is up (plus orientation around the same coordinates). | ||

| + | The reference position is the centre between the driving wheels at ground level. | ||

| − | + | Each marker is updated with this code | |

| − | + | ||

| − | + | ArUcoVal * v = getID(i); | |

| + | if (v != NULL) | ||

| + | { // save marker info and do coordinate conversion for marker | ||

| + | v->lock.lock(); | ||

| + | v->markerId = i; | ||

| + | // set time and frame-number for this detection | ||

| + | v->imageTime = imTime; | ||

| + | v->frameNumber = frameNumber; | ||

| + | // v->frame = frame; // might use frame.copyTo(v->frame) insted | ||

| + | v->rVec = rotationVectors[j]; | ||

| + | v->tVec = translationVectors[j]; | ||

| + | // | ||

| + | if (debugImages) | ||

| + | { // show detected orientation in image X:red, Y:green, Z:blue. | ||

| + | cv::aruco::drawAxis(frameAnn, cam->cameraMatrix, cam->distortionCoefficients, v->rVec, v->tVec, 0.03f); //X:red, Y:green, Z:blue. | ||

| + | } | ||

| + | // | ||

| + | v->markerToRobotCoordinate(cam->cam2robot); | ||

| + | v->isNew = true; | ||

| + | v->lock.unlock(); | ||

| − | + | The coordinate conversion is in the '' 'markerToRobotCoordinate(cv::Mat cam2robot)' '' function. | |

| − | + | This function also determines if the marker is vertical or horizontal, and estimates based on this the orientation as one angle around the robot z-axis (up). This can then be used as a destination pose, using the x,y from the marker position and heading (called '' 'markerAngle' '') | |

| − | + | The values for the marker is protected by a resource lock, as it is generated and used by different threads. | |

| − | + | ==Installation== | |

| − | + | ||

| − | === | + | ===Get software=== |

| − | + | Get the ROBOBOT software from the svn repository: | |

| − | + | svn checkout svn://repos.gbar.dtu.dk/jcan/robobot | |

| + | Or update if the code is there already (as it is on the Raspberry Pi) | ||

| − | + | svn up | |

| − | + | NB! this will most likely create a conflict if you have changed the code. | |

| − | + | ===Compile=== | |

| − | + | Build Makefiles and compile | |

| − | + | ||

| − | + | ||

cd ~/mission | cd ~/mission | ||

| − | + | mkdir -p build | |

| + | cd build | ||

| + | cmake .. | ||

| + | make -j3 | ||

| − | then | + | Once the "build" directory is made and "make" is called for the first time, then the last line "make -j3" is needed. |

| − | + | Then test-run the application: | |

| − | + | ./mission | |

| + | |||

| + | It should print that the camera is open and the bridge is connected to the REGBOT hardware. | ||

Latest revision as of 13:38, 2 February 2023

Back to robobot

Contents |

[edit] Robobot mission software

NB! not valid for the 2023 version of the software.

This figure shows the mission functional blocks. The primary connection is to the robobot_bridge, through which the robot is controlled. The red block is the main part where the behaviour is controlled. Data elements are available in the bottom row of boxes. A camera block is available, where image processing is assumed to happen. Some vision example code is included as inspiration.

The blocks marked with a circle arrow are running in a thread to handle incoming data (from bridge or camera).

The mission application executable is in /home/local/mission/build:

local@Oscar:~ $ cd mission/build local@Oscar:~/mission/build $ ./mission Received, but not used: # Welcome to robot bridge (HAL) - send 'help' for more info Received, but not used: bridge 1 crc mod99 # Video device 0: width=1280, height=720, format=MJPG, FPS=25 # Vision::setup: Starting image capture loop # Setup finished OK

When the application starts, the welcome message is ignored (Received, but not used). It opens the camera and displays the used image size (1280 x 720) and format. And the setup is finished.

[edit] Main

The main mission program example program is like this:

1 int main(int argc, char **argv)

2 {

3 if (setup(argc, argv))

4 { // start mission

5 std::cout << "# Robobot mission starting ...\n";

6 step1();

7 step2();

8 std::cout << "# Robobot mission finished ...\n";

9 // remember to close camera

10 vision.stop();

11 sound.say("I am finished..", 0.2);

12 while (sound.isSaying())

13 sleep(1);

14 bridge.tx("regbot mute 1\n");

15 }

16 return 0;

17 }

A C++ program starts at the main(..) function.

First, the interfaces and data sources need to be set up in line 3; if setup fails, the program terminates.

Line 5 is just a print to the console.

Lines 6 and 7 are the two mission parts used in this example.

Then there is just cleanup left. Line 14 shows the way to send data to the Regbot through the Bridge. "Bridge.tx()" is the function call to transmit data to the bridge. When the send text starts with "regbot" the rest is send to the Regbot by the bridge, as "regbot" is a data source name.

[edit] Mission step

The first mission step in this example is:

1 void step1()

2 {

3 sound.say(". Step one.", 0.3);

4 // remove old mission

5 bridge.tx("regbot mclear\n");

6 // clear events received from last mission

7 event.clearEvents();

8 // add mission lines

9 bridge.tx("regbot madd vel=0.2:time=1\n");

10 bridge.tx("regbot madd tr=0.1:time=1,turn=-90\n");

11 bridge.tx("regbot madd :time=1\n");

12 // start this mission

13 bridge.tx("regbot start\n");

14 // wait until finished

15 //

16 cout << "Waiting for step 1 to finish (event 0 is send, when mission is finished)\n";

17 event.waitForEvent(0);

18 // sound.say(". Step one finished.");

19 }

Line 3 calls a "sound" function called "sound.say("string", volume)"; the function converts the text to sound (in the English language) and plays that sound file (aa.wav).

Line 5 sends a message to the Regbot to clear any old mission stored (this will also stop the active control of the robot wheels if a mission is running)

Line 7 clears events. Events can be generated in any mission line (with number 1 to 30) and is automatically generated at the start (event 33) and stop (event 0) of a Regbot mission.

Line 9 to 11 adds new mission lines, the first part "regbot" tells the bridge that it is for the Regbot, and the second part "madd" tells the Regbot that this is a line to add. The rest of the string is decoded as a mission line.

In case of syntax error, a message is sent back from the Regbot, like:

regbot:# UMissionThread::addLine syntax error thread 1 line 0: failed parameter at 2:time=1

The first part "regbot:" says that it is from the Regbot, the rest of the line says that in "thread 1 line 0", there is an error. The offending part is shown "2:time=1", here the error was that the velocity part "vel=0.2" was written as "vel=0,2", and the comma is used as a separation of commands.

Line 13 tells the Regbot to start the just downloaded mission lines.

Line 17 waits for event 0 to happen, indicating "end of the mission".

[edit] Setup

The setup function called all data modules in turn. The data modules will subscribe to the relevant data from the bridge and the Regbot.

The bridge module that receives the returned data from the bridge will, in turn, ask all the data modules if they handle this message type. The code is in line 40 in the "bridge.cpp" file.

[edit] Vision

The vision setup opens the camera with these lines in file "vision.cpp"

// line 64 ff

// prepare to open the camera

int deviceID = dev; // 0 = open default camera

int apiID = cv::CAP_V4L2; // Video for Linux version 2

// open selected camera using selected API

cap.open(deviceID, apiID);

// check if we succeeded

camIsOpen = cap.isOpened();

if (not camIsOpen)

{

cerr << "ERROR! Unable to open camera\n";

}

[edit] Capture image thread

If opening is successful, then a thread is started (line 102):

// start thread to keep buffer empty

printf("# Vision::setup: Starting image capture loop\n");

listener = new thread(startloop, this);

The "startLoop" calls "loop" (line 136ff)

void UVision::loop() {

while (camIsOpen and not terminate)

{ // keep framebuffer empty

if (useFrame)

{ // grab and decode next image

cap.read(frame);

// mark as available

gotFrame = not frame.empty();

useFrame = not gotFrame;

}

else

// just grab the image - mark it as used

cap.grab();

frameSerial++;

}

}

As long as no one has set the boolean "useFrame=true", the loop will just call "cap.grab()" to keep the frame buffer empty. When "useFrame" is true, the next image will be saved in the "frame" image buffer.

The function "getNewestFrame()" will tell the loop to capture an image and then wait until the image is in the frame buffer.

[edit] Process image

The function "processImage()" is intended to be called from one of the mission steps, and this example is overly complicated, but some of the important lines are shown here:

170 bool UVision::processImage(float seconds)

{ // process images in 'seconds' seconds

...

182 getNewestFrame();

if (gotFrame)

{ // save the image - with a number

const int MSL = 100;

char s[MSL];

snprintf(s, MSL, "sandberg_%03d.png", n);

t3.now();

200 cv::imwrite(s, frame);

printf("Image save took %.3f sec\n", t3.getTimePassed());

}

...

ballBoundingBox.clear();

207 terminate = doFindBall();

...

return terminate or not camIsOpen;

}

Line 182 requests a fresh image, "gotFrame" is true if successful.

Line 200 saves the image to a file (n is the frame number).

Line 207 calls an image analysis function and returns true if a ball is found.

[edit] Find ball OpenCv example

To illustrate some of the OpenCV calls, the example function "doFineBall" highlights are:

bool UVision::doFindBall()

{ // process pipeline to find

// bounding boxes of balls with matched colour

242 cv::Mat yuv;

244 cv::cvtColor(frame, yuv, cv::COLOR_BGR2YUV);

int h = yuv.rows;

int w = yuv.cols;

247 cv::imwrite("yuv_balls_01.png", yuv);

// color for filter

251 cv::Vec3b yuvOrange = cv::Vec3b(128,88,187);

252 cv::Mat gray1(h,w, CV_8UC1);

// test all pixels

for (int r = 0; r < h; r++)

{ // get pointers to pixel-row for destination image

256 uchar * pOra = (uchar*) gray1.ptr(r); // gray

for (int c = 0; c < w; c++)

{ // go through all pixels in this row

int d;

260 cv::Vec3b p = yuv.at<cv::Vec3b>(r,c);

261 d = uvDistance(p, yuvOrange);

262 *pOra = 255 - d;

pOra++; // increase to next destination pixel

}

}

// do static threshold at value 230, max is 255, and mode is 3 (zero all pixels below threshold)

cv::Mat gray2;

285 cv::threshold(gray1, gray2, 230, 255, 3);

// remove small items with a erode/delate

// last parameter is iterations and could be increased

cv::Mat gray3, gray4;

290 cv::erode(gray2, gray3, cv::Mat(), cv::Point(-1,-1), 1);

cv::dilate(gray3, gray4, cv::Mat(), cv::Point(-1,-1), 1);

Line 242 creates an OpenCV image handle called "yuv"

Line 244 converts the fresh image to be in YUV colour coding; this has isolated brightness to the channel Y and the colour to two dimensions U and V.

Line 247 Saves the YUV image to a file (as if it were a BGR image), this is to be used to find the colour (Y and V) of the ball to be detected.

Line 251 Inserts the found colour (found in an image application from the "yuv_balls_01.png" file)

Line 252 Creates a gray-scale image of the same size as the original image (gray values from 0 to 255, CV_8UC1 is 8-bit unsigned with one channel).

Line 256 Gets a pointer to the first pixel in the grayscale image (to write the filtered image)

Line 260 Gets the YUV pixel at position (r,c) as a vector with 3 byte sized values (cv:Vec3b).

Line 261 Gets the colour difference between the selected U,V value (line 251) and the UV value of this pixel, by just adding the distance in the U direction to the distance in the Y direction, as:

225 int UVision::uvDistance(cv::Vec3b pix, cv::Vec3b col)

{ /// format is Y,V,U and Y is not used

int d = abs(pix[1] - col[1]) + abs(pix[2] - col[2]);

The output is limited to maximum 255.

Line 262 Writes the result to the grayscale image so that a small distance is white (255).

Line 285 Then thresholds the image to a new image called "gray2". Values above 230 (no more than 25 values from the selected colour) are likely to be the from the ball colour we are looking for.

Line 290 The thresholded image is then further filtered.

[edit] Camera

[edit] Camera calibration

To use the camera to determine distances, calibration is needed.

A rough calibration is used that, in most cases, is sufficient.

The camera calibration consists of a camera matrix and a lens distortion vector. This is set in the camera class definition in the file 'ucamera.h':

/** camera matrix is a 3x3 matrix (raspberry PI typical values)

* pix ---1---- ---2--- ---3--- -3D-

* 1 (x) 980 0 640 (X)

* 2 (y) 0 980 480 (Y)

* 3 (w) 0 0 1 (Z)

* where [1,1] and [2,2] is focal length,

* and [1,3] is half width center column (assuming image is 1280 pixels wide)

* and [2,3] is half height center row (assuming image is 960 pixels high)

* [X,Y,Z] is 3D position (in camera coordinated (X=right, Y=down, Z=front),

* [x,y,w] is pixel position for 3D position, when normalized, so that w=1

*/

const cv::Mat cameraMatrix = (cv::Mat_<double>(3,3) <<

980, 0, 640,

0, 980, 480,

0, 0, 1);

/**

* camera radial distortion vector

* 1 (k1) 0.14738

* 2 (k2) 0.0117267

* 3 (p1) 0

* 4 (p2) 0

* 5 (k3) -0.14143

* where k1, k2 and k3 is radial distortion params

* and p1, p2 are tangential distortion

* see https://docs.opencv.org/2.4/doc/tutorials/calib3d/camera_calibration/camera_calibration.html

* */

const cv::Mat distortionCoefficients = (cv::Mat_<double>(1,5) <<

0.14738,

0.0117267,

0,

0,

-0.14143);

The matrix 'cameraMatrix' holds the focal length set to approximately 980 pixels (in this image resolution) and the optical centre of the image set to the geometric centre of the image frame.

The vector 'distortionCoefficients' is set to some values estimated in an earlier student project.

[edit] Camera coordinate conversion matrix

The camera is placed on the robot at some distance from the origo of the robot coordinate system.

The default position and tilt is set in the class definition (uvision.h):

const float camPos[3] = {0.13,-0.02, 0.23}; // in meters

const float camTilt = 22 * M_PI / 180; // in radians

cv::Mat1f camToRobot;

And the conversion matrix from camera coordinates to robot coordinates are added in uvision.cpp setup()

float st = sin(camTilt);

float ct = cos(camTilt);

camToRobot = (cv::Mat1f(4,4) << ct, 0.f, st, camPos[0],

0.f , 1.f, 0.f , camPos[1],

-st, 0.f, ct, camPos[2],

0.f , 0.f, 0.f , 1.f);

This coordinate conversion matrix is used to find the position of an object (e.g. a ball) once the ball's position is found in camera coordinates.

[edit] ArUco

OpenCV has a library function to detect ArUco codes and estimate their position in camera coordinates.

This requires that the camera is calibrated with a camera matrix and a lens distortion vector. These are implemented in the camera class (UCamera.h).

The coordinate system used for detection is camera coordinates: (x,y,z) where x is to the right, y is down and z is forward and rotation around the same axes.

An ArUco marker seen by the robot in my home domain.

[edit] Ecample code

An example code to extract the codes and save the ArUco marker position in robot coordinates are implemented as a 'ArUcoVals' class in the uaruco.h and uaruco.cpp files. The found values are stored in an array 'arucos' of class objects of type 'ArUcoVal' (also in the uaruco.h and aruco.cpp files)

The extraction is in the function

int ArUcoVals::doArUcoProcessing(cv::Mat frame, int frameNumber, UTime imTime)

{

cv::Mat frameAnn;

const float arucoSqaureDimensions = 0.100; //meters

vector<int> markerIds;

vector<vector<cv::Point2f>> markerCorners; //, rejectedcandidates;

cv::aruco::DetectorParameters parameters;

//seach DICT on docs.opencv.org

cv::Ptr < cv::aruco::Dictionary> markerDictionary = cv::aruco::getPredefinedDictionary(cv::aruco::PREDEFINED_DICTIONARY_NAME::DICT_4X4_100);

// marker position info

vector<cv::Vec3d> rotationVectors, translationVectors;

UTime t; // timing calculation (for log)

t.now(); // start timing

// clear all flags (but maintain old information)

setNewFlagToFalse();

/** Each marker has 4 marker corners into 'markerCorners' in image pixel coordinates (float x,y).

* Marker ID is the detected marker ID, a vector of integer.

* */

cv::aruco::detectMarkers(frame, markerDictionary, markerCorners, markerIds);

// marker size is same as detected markers

if (markerIds.size() > 0)

{ // there are markers in image

if (debugImages)

{ // make a copy for nice saved images

frame.copyTo(frameAnn);

cv::aruco::drawDetectedMarkers(frameAnn, markerCorners, markerIds);

}

//

cv::aruco::estimatePoseSingleMarkers(markerCorners,

arucoSqaureDimensions,

cam->cameraMatrix,

cam->distortionCoefficients,

rotationVectors,

translationVectors);

}

else

printf("# No markers found\n");

The functions 'cv::aruco::detectMarkers(...)' and 'cv::aruco::estimatePoseSingleMarkers(...)' has the functionality, but requires som data setup to function, as shown above.

All found markers are then saved and their position and orientation is converted to robot coordinates. Robot coordinates are defined as (x,y,z), where x is forward, y is left and z is up (plus orientation around the same coordinates). The reference position is the centre between the driving wheels at ground level.

Each marker is updated with this code

ArUcoVal * v = getID(i);

if (v != NULL)

{ // save marker info and do coordinate conversion for marker

v->lock.lock();

v->markerId = i;

// set time and frame-number for this detection

v->imageTime = imTime;

v->frameNumber = frameNumber;

// v->frame = frame; // might use frame.copyTo(v->frame) insted

v->rVec = rotationVectors[j];

v->tVec = translationVectors[j];

//

if (debugImages)

{ // show detected orientation in image X:red, Y:green, Z:blue.

cv::aruco::drawAxis(frameAnn, cam->cameraMatrix, cam->distortionCoefficients, v->rVec, v->tVec, 0.03f); //X:red, Y:green, Z:blue.

}

//

v->markerToRobotCoordinate(cam->cam2robot);

v->isNew = true;

v->lock.unlock();

The coordinate conversion is in the 'markerToRobotCoordinate(cv::Mat cam2robot)' function.

This function also determines if the marker is vertical or horizontal, and estimates based on this the orientation as one angle around the robot z-axis (up). This can then be used as a destination pose, using the x,y from the marker position and heading (called 'markerAngle' )

The values for the marker is protected by a resource lock, as it is generated and used by different threads.

[edit] Installation

[edit] Get software

Get the ROBOBOT software from the svn repository:

svn checkout svn://repos.gbar.dtu.dk/jcan/robobot

Or update if the code is there already (as it is on the Raspberry Pi)

svn up

NB! this will most likely create a conflict if you have changed the code.

[edit] Compile

Build Makefiles and compile

cd ~/mission mkdir -p build cd build cmake .. make -j3

Once the "build" directory is made and "make" is called for the first time, then the last line "make -j3" is needed.

Then test-run the application:

./mission

It should print that the camera is open and the bridge is connected to the REGBOT hardware.