Robobot mission

(→ArUco) |

(→ArUco) |

||

| Line 483: | Line 483: | ||

[[File:i12432_annotated_20200105_091123.850.png | 400px]] | [[File:i12432_annotated_20200105_091123.850.png | 400px]] | ||

| − | An ArUco marker in my home domain. | + | An ArUco marker seen by the robot in my home domain. |

=== Ecample code === | === Ecample code === | ||

Revision as of 20:37, 18 January 2020

Back to robobot

Contents |

Robobot mission software

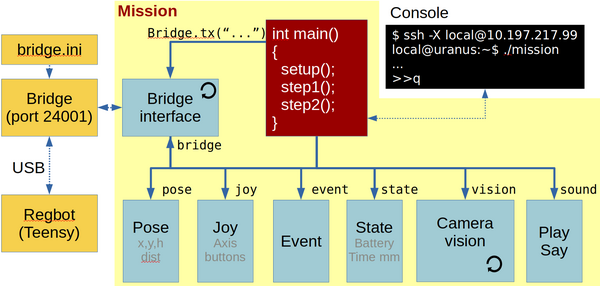

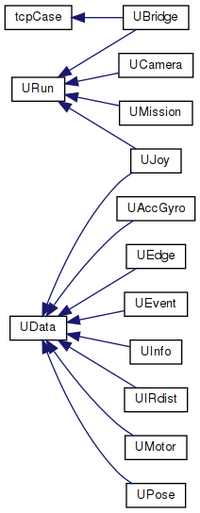

This figure shows the mission functional blocks. The main connection is to the robobot_bridge, through which the robot is controlled. The red mission block is the main block, where the behaviour is controlled. To help decide and control the behaviour, there is a number of data elements available in the bottom row of boxes, available through the bridge. A camera block is available, where image processing is assumed to happen. From the camera block, there is support for ArUco marker detection.

The blocks marked with a circle arrow is running their own thread. The yellow boxes are features available outside the mission application.

The mission application executable is in /home/local/mission/build:

local@solvej:~ $ cd mission/build local@solvej:~/mission/build $ ./mission image size 960x1280 Connecting to camera Connected to pi-camera ='00000000a0604732 # Welcome to REGBOT bridge - send 'help' for more info # press green to start. Press h for help (q for quit) >>

When the application is starting it opens the camera and displays the used image size (960x1280) and the camera serial number 00000000a0604732. Then it connects to the bridge, that reply "# Welcome to REGBOT bridge". The connection to the bridge is strictly needed, otherwise the mission app terminates.

The "# press green to start." is information from the actual mission plan.

There is a prompt at the end ">>" indication that the keyboard is active.

Main

When the mission app is started a number of functions are available from the keyboard.

Press h (and enter) to get a list of features:

>> h # got 'h' n=1 # mission command options # a Do an ArUco analysis of a frame (no debug - faster) # b Bridge restart # c Capture an image and save to disk (image*.png) # d 1/0 Set/clear flag to save ArUco debug images, is=0 # h This help # lo xxx Open log for xxx (pose 0, hbt 0, bridge 0, imu 0 # ir 0, motor 0, joy 0, event 0, cam 0, aruco 0, mission 0) # lc xxx Close log for xxx # o Loop-test for steady ArUco marker (makes logfile) # p 99 Camera pan (Yaw) degrees (positive CCV) is 0.0 deg # q Quit now # r 99 Camera roll degrees (positive left), is 0.0 deg # s Status (all) # t 99 Camera tilt degrees (positive down), is 10.0 deg # 2 x y h d To face destination (x,y,h) at dist d # 3 x y h Camera position on robot (is 0.030, 0.030, 0.270) [m] # # NB! Robot may continue to move if this app is stopped with ctrl-C. >>

Log-files can be opened, this is a nice feature for debugging and analyzing sensor behaviour. e.g. the command

>> lo pose hbt imu ir motor joy event cam aruco mission

will open logfiles for most of the available data - except the bridge, that logs all communication.

The camera pose should be set in "ucamera.h", but can temporarily be changed here with the 'p', 'r', 't' and '3' commands.

The status list 's' gives an actual status for rather many data points, including 'data age', the time since the last update.

In the line starting with '3' is shown current camera position on the robot, relative to the centre between the driving wheels at floor level 3cm more forward, 3cm to the left and 27cm above the ground.

Logfiles

The data blocks have a data logger feature that can be enabled and disabled. The interface logfile will be in a text format for MATLAB import. The name of the log-file will include date and time, and will therefore not overwrite the previous logfile.

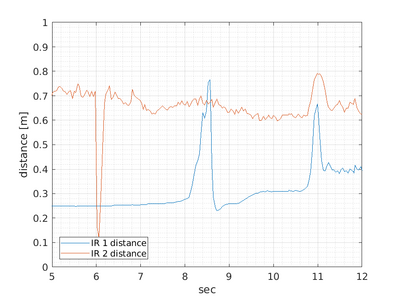

The IR logfile could be named 'log_irdist_20200105_133333.297.txt' look like this:

% robobot mission IR distance log % 1 Timestamp in seconds % 2 IR 1 distance [meter] % 3 IR 2 distance [meter] % 4 IR 1 raw [AD value] % 5 IR 2 raw [AD value] 1578227613.337 0.250 0.730 33549 5864 1578227613.372 0.249 0.740 33649 5687 1578227613.419 0.250 0.759 33476 5330 1578227613.455 0.250 0.716 33468 6147 1578227613.494 0.250 0.727 33470 5936

And can be displayed in MATLAB using a script like this

ir = load('log_irdist_20200105_133333.297.txt'); %

h = figure(300)

hold off

plot(ir(:,1) - ir(1,1), ir(:,2));

hold on

plot(ir(:,1) - ir(1,1), ir(:,3));

grid on

grid MINOR

axis([5,12,0,1])

legend('IR 1 distance', 'IR 2 distance','location','southwest')

xlabel('sec')

ylabel('distance [m]')

saveas(h, 'ir1-ir2.png')

And in this case, the plot shows:

The plot shows that longer distances (IR2) have more noise than shorter distances (IR1). In this case the drive is started by an IR2 distance less than 0.3m (at 6 seconds), hereafter the robot moves and the IR 1 distance (looking right) sees different obstacles.

Bridge

This part handles the interface with the regbot_bridge application. This two-way communication handles each direction individually.

Sending messages is mostly handled by the mission block, here regular updates of data is requested for the data blocks - e.g. robot pose, joystick buttons, IR-sensor measurements etc.

The receiving part of the bridge is always waiting for messages and distribute them for the relevant data block for decoding.

In a normal setup, about 150 messages will be received each second.

Data elements

This is a list of the features of each of the data elements.

IMU

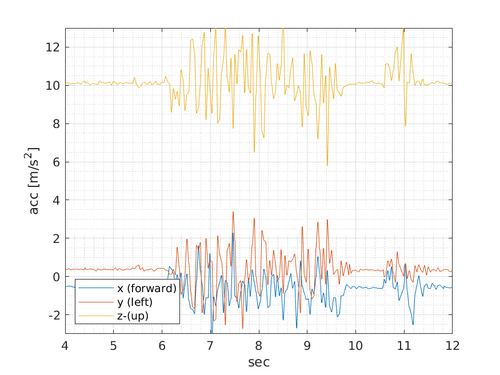

The IMU is an MPU-9150 chip from InvenSense, that provide accelerometer and gyro signals on all 3 axes.

Accelerometer data. The z-axis shows the gravity acceleration, and when the robot starts driving after 6 seconds, there is clearly some bumping, that is seen as noise. The robot holds a pause at about 10 to 10.5 seconds and then turns a bit. The values on the x-axis (forward) ought to show some lateral acceleration, but it is hard to see in the noise.

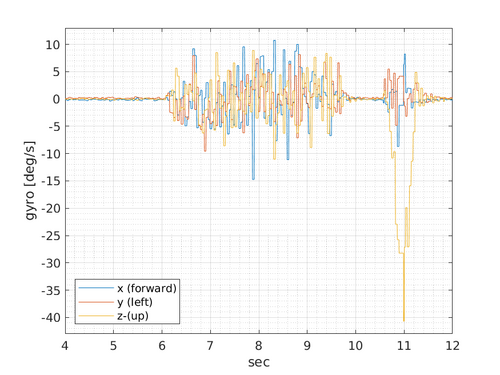

Gyro data. While driving forward (from 6 to 10 seconds) there is a significant amount of noise. The robot holds a pause at about 10 to 10.5 seconds and then turns a bit. The turning (from 10.5 to 11.5 seconds) is clearly visible on the gyro z-axes.

The data is accessible in the mission code as:

bridge->imu->acc[0] Acceleration in x-axes (forward) [m/s^2] bridge->imu->acc[1] y-axis (left) bridge->imu->acc[2] z-axes (up) bridge->imu->gyro[0] Rotation velocity around x-axes [degree/sec] bridge->imu->gyro[1] y-axis bridge->imu->gyro[2] z-axes

There is further a turn rate function (vector sum of all three gyro axes):

bridge->imu->turnrate()

There is no calculated nor calibrated scale not offset on the accelerometer reading.

The gyro offset can be calibrated using the REGBOT GUI (IMU tab).

IR-dist

There are two IR sharp sensors type GP2Y0A21, a 10-80cm sensor.

The raw response from the sensor is approximate as shown here

The part from about 8-10cm is converted to a distance in meter using the calibration points in the REGBOT GUI.

A data example is shown above in the logfiles section.

The data is accessible using

bridge->irdist->dist[0] is distance sensor 1 [m] bridge->irdist->dist[1] is distance sensor 2 [m]

Pose

The robot pose includes position, orientation and tilt and is accessed as

bridge->pose->x distance in x-direction (forward) sinse start of mission. bridge->pose->y y-distance bridge->pose->h heading in radians, zero is aligned with x-axes, positive is counter clockwise bridge->pose->tilt tilt is rotation angle around the y-axis (used for REGBOT balance) (using a complementary filter (acc and gyro). bridge->pose->dist distance driven since start of mission [m].

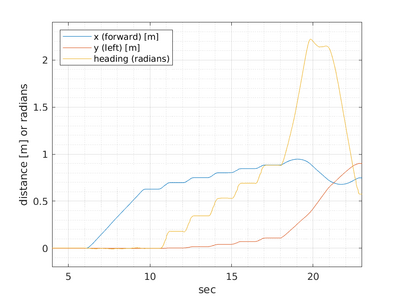

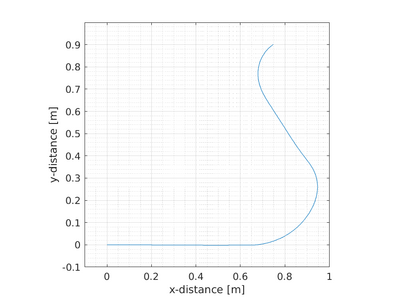

The data from the same mission as above looks like.

The first plot shows that the robot moves 0.6m forward (y=0 and h=0) starting at 6 seconds and stops at 10 seconds - to take an image. After this, it takes a number of turns (about 20 degrees with a turning radius of about 0.2m) with a stop for about a second (looking for an ArUco code in the image).

After 18 seconds and the heading is about 0.9 radians, and an ArUco-code is visible. Thien the robot advances to the position of the code (in a turn-drive-turn manoeuver).

The second plot shows the position of the robot. First about 0.6m forward, then turning in small steps until the marker is seen, from there on a turn with a smaller turn radius, a straight part and a final turn to face the marker.

Calibration of the odometry is the wheel-base (distance between driving wheels) and the wheel radius. These setting must be inserted in the REGBOT GUI (robot tab).

Joy

The joystick data is available from the bridge.

The following data is available

bridge->joy->axes[0] (left hand left-right axes) ... bridge->joy->axis[7] (digital up-down axes)

For the other axes see Robobot_bridge#Gamepad. The value is a signed 16-bit value (-32767 to 32766) for the axes.

bridge->joy->button[0] Green button ... bridge->joy->button[10] Right knob

For the other buttons see Robobot_bridge#Gamepad. The value is 0 (not pressed) and 1 (pressed).

There is further a flag for manual override by the gamepad

bridge->joy->manual

With true or false value (true means gamepad control)

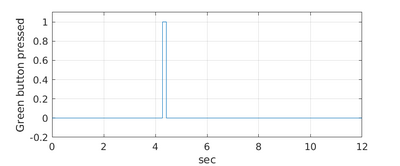

The mission from the other plots gets started with a press on the green button about 4 seconds after the start of the mission application. (the robot then further awaits the distance of IR sensor 2 is below 0.3m before starting to move).

Motor

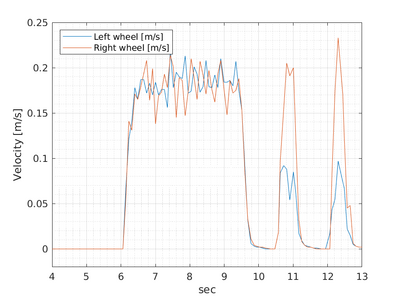

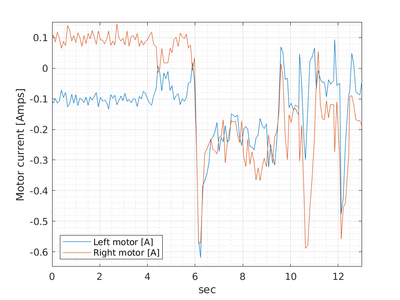

The motor interface gives the (estimated) velocity of the two driving wheels.

bridge->motor->velocity[0] Velocity of the left wheel in m/s bridge->motor->velocity[1] Velocity of right wheel

There is further access to the motor current.

bridge->motor->current[0] Current of the left motor in Amps bridge->motor->current[1] Current of right motor

An example from the mission used earlier is shown. The left plot shows the estimated velocity, where the velocity starts after 6 seconds at about 0.2 m/s and going back to zero at about 10 seconds, and after that starts turning at 10.5 and 12 seconds - the right wheel drives faster. It is visible that there is significant noise on the velocity estimate.

The right current plot shows that the control is active (but not moving) with a current about 100mA. After 6 seconds there is a current peak to about 600mA that starts the motors and then drives with a current of about 250mA. Again after 10.5 and 12 seconds, there is a start-current peak.

Info

The info block holds some static data, but also data from the heartbeat message - the only part that can be logged).

From heartbeat message

bridge->info->regbotTime Time from REGBOT in seconds since "start" message bridge->info->batteryVoltage In Volts bridge->info->missionLineNum Line number in thread (the last linenumber started) bridge->info->missionThread The thread number (where the line number is valid)

Basic robot info that is updated at least once at the start of the mission

bridge->info->robotId The REGBOT robot ID number (decides the name) bridge->info->odoWheelBase The (calibrated) distance between the driving wheels (reproted by REGBOT), bridge->info->gear The gear ratio (reported by REGBOT) bridge->info->pulsPerRev The number of ticks in one motor rotation (reported from REGBOT) bridge->info->odoWheelRadius[0] The radius of left wheel in meters (reported from REGBOT) bridge->info->odoWheelRadius[1] The radius of right wheel in meters bridge->info->robotname A C-string with the robot name bridge->info->msgCnt1sec Number of messages received from bridge in one second.

There is further a function that reports if communication with the robot is OK.

bridge->info->isHeartbeatOK()

Returns true if a heartbeat message is reported within the last 2 seconds. It should be reported about every 0.5 seconds.

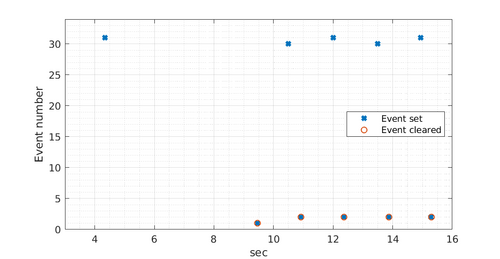

Event

Holds all 34 event flags.

To test if an event has occurred use this function

bridge->event->isEventSet(X); Where X is the event number (between 0 and 33)

This call to isEventSet(x) also clears the event flag, so that the flag is ready to be set again. And thus can be used to clear the event.

The raw event flag can be accessed in the event array:

bridge->event->eventFlags[X]; Where X is a number from 0 to 33.

Two flags have a special meaning: Event 0 stops the mission in REGBOT (and terminates the mission app). To trigger a stop event use the call:

bridge->stop();

To start a mission send the command "start" to the robot:

bridge->send("start\n");

This will trigger an event 33.

Events 30 and 31 are used in the mission app to swap between the two buffer threads and should not be used for anything else.

Events 1 to 29 can be used in mission lines to track progress.

The logfile has a list of when each of them has been set or cleared.

This plot shows the events related to the mission also used above. The first event is 31 at about 5 seconds, this will activate the first thread with drive mission lines. When the drive has reached the last line is sends an event 1 (about 9.5 seconds), this event is regularly tested by the mission app and is cleared almost immediately. It triggers a pause where an image is taken and analyzed.

After a second, the conclusion is clear and new mission lines (called a mission snipped) are sent to the REGBOT and activated with an event 30 (to the REGBOT). When the snippet is finished an event 2 is sent (from the REGBOT). This again triggers new actions in the mission app.

Edge

The edge sensor (also called line sensor) is primarily used by the REGBOT when driving on a line, but some information is available from the edge sensor:

bridge->edge->edgeValidLeft bridge->edge->edgeValidRight bridge->edge->edgeCrossingBlack bridge->edge->edgeCrossingWhite

These (bool variables) will be set (to 1 (true) or 0 (false)) as detected by the line sensor but is updated at a rather slow pace (30-50ms). it is thus likely that a crossing line can be missed (the detection on REGBOT is tested evert 1ms).

'EdgeCrossingBlack' crossing a black line is never really tested, and should not be considered usable. The two variables 'edgeValidLeft' and 'edgeValidRight' are always true and false at the same time (a legacy feature).

Mission

The mission block has access to all the other elements and controls the performance of the robot.

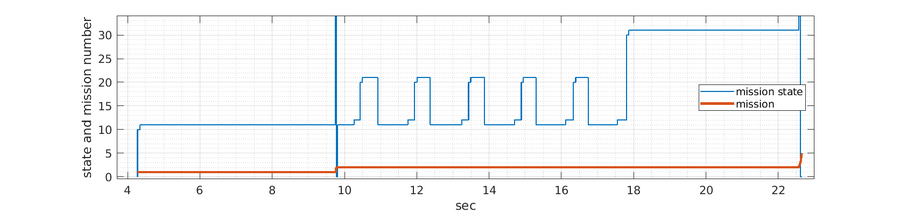

The mission is controlled by two states

- a mission segment - that is intended to do a part of a mission

- a mission state - that control the mission snippets that are sent to the REGBOT for execution.

The mission used in the plots above is divided into two mission segments. The first segment waits for a start signal and drives the first straight part. The second segment do the rest - look for Aruco code and turns a bit if not found and drive to the ArUco marker if found.

The following plot shows the timing of these two states, the mission segment and the mission state.

The mission segment (red curve) start by mission segment 1 after about 6 seconds and switch to mission segment 2 after about 9.5 seconds. The mission state (blue curve) starts at state 0, then fast state 10 and 11, stay at state 11 for a long time (while driving) and ends at state 999 (over the top). Mission segment switches between states 10, 11, 20 and 21 a number of times (turning), and when the marker is found state 30 and 31.

Mission segment loop

The mission state is handled by a function called

runMission()

In this loop, there is also a check for manual or automatic operation and some odd gamepad reactions.

The mission segment loop constantly calls the mission code with the mission state as a parameter.

The mission segment loop code is described here in more detail.

Mission segment code

mission1(state)

- First segment with movement (0.6m at a velocity of 0.2m/s) and is using a gamepad button, event, sound and display on the o-led display.

mission2(state)

- Second segment that waits for the robot to stand still, take an image and do ArUco code analysis. Depending on the code analysis either the robot turns or moves to face the marker. It is using a library to calculate a manoeuvre (a turn, a straight part and an end turn to get to the destination.

mission3(state) ...

- Empty functions that just return true (finished).

The mission code is described here in more detail.

Camera

The camera interface sets the camera to 1280 x 960 pixels resolution at 30 frames per second and RGB format (setupCamera() in 'ucamera.h').

Frame capture thread

After setup a new thread is created that calls the 'run()' function (in the 'ucamera.cpp' file).

The thread captures constantly images from the camera in an attempt not to have the most recent image frame available.

Then it checks if any of the use-flags (saveImage or doArUcoAnalysis) are set, and if so call an appropriate function. The flag will be cleared to indicate that the processing is finished.

Logfile and timing

There is a camera log file that can be used to get an idea of the framerate and processing time of the used frames. This can be opened from the console.

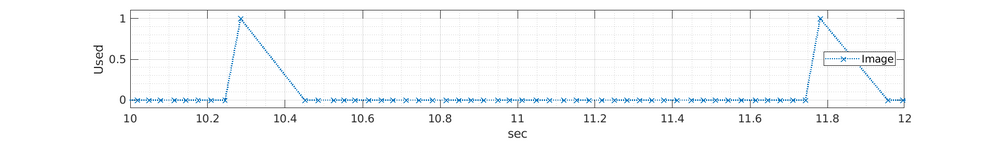

From the example mission, the following data is obtained:

The plot shows with a 'X' when an image frame is captured, and if an ArUco analysis is requested (value 1).

This shows about 33ms between the camera frames in most cases.

When an ArUco marker analysis is performed (at 10.28 seconds) it takes about 170ms before the next frame is captured. This must then be the time taken by the ArUco detection process (no code was found in this case). When a code is detected it took 240ms to process (not shown in the plot).

Camera calibration

To use the camera to determine distances, a calibration is needed.

A rough calibration is used that in most cases is sufficient.

The camera calibration consist of a camera matrix and a lens distortion vector. This is set in in the camera class definition in the file 'ucamera.h':

/** camera matrix is a 3x3 matrix (raspberry PI typical values)

* pix ---1---- ---2--- ---3--- -3D-

* 1 (x) 980 0 640 (X)

* 2 (y) 0 980 480 (Y)

* 3 (w) 0 0 1 (Z)

* where [1,1] and [2,2] is focal length,

* and [1,3] is half width center column (assuming image is 1280 pixels wide)

* and [2,3] is half height center row (assuming image is 960 pixels high)

* [X,Y,Z] is 3D position (in camera coordinated (X=right, Y=down, Z=front),

* [x,y,w] is pixel position for 3D position, when normalized, so that w=1

*/

const cv::Mat cameraMatrix = (cv::Mat_<double>(3,3) <<

980, 0, 640,

0, 980, 480,

0, 0, 1);

/**

* camera radial distortion vector

* 1 (k1) 0.14738

* 2 (k2) 0.0117267

* 3 (p1) 0

* 4 (p2) 0

* 5 (k3) -0.14143

* where k1, k2 and k3 is radial distortion params

* and p1, p2 are tangential distortion

* see https://docs.opencv.org/2.4/doc/tutorials/calib3d/camera_calibration/camera_calibration.html

* */

const cv::Mat distortionCoefficients = (cv::Mat_<double>(1,5) <<

0.14738,

0.0117267,

0,

0,

-0.14143);

The matrix 'cameraMatrix' holds the focal length set to approximately 980 pixels (in this image resolution) and the optical centre of the image set to the geometric centre of the image frame.

The vector 'distortionCoefficients' is set to some values estimated in an earlier student project.

Camera coordinate conversion matrix

The camera is placed on the robot in some distance from the origo of the robot coordinate system.

The default position is set in the class definition (ucamera.h):

// camera position on robot

cv::Vec3d camPos = {0.03, 0.03, 0.27}; /// x=fwd, y=left, z=up

cv::Vec3d camRot = {0, 10*M_PI/180.0, 0}; /// roll, tilt, yaw (right hand rule, radians)

These values can be set with console command while the mission app is running, see Robobot_mission#Main.

But also by the calls

void setTilt(float tilt); void setPan(float pan); void setRoll(float roll); void setPos(float x, float y, float z);

These settings are used in the function 'makeCamToRobotTransformation()' in ucamera.cpp to set the coordinate conversion matrix

cv::Mat cam2robot;

ArUco

OpenCV has a library function to detect ArUco codes and estimate their position in camera coordinates.

This requires that the camera is calibrated with a camera matrix and a lens distortion vector. These are implemented in the camera class (UCamera.h).

The coordinate system used for detection is camera coordinates: (x,y,z) where x is to the right, y is down and z is forward and rotation around the same axes.

An ArUco marker seen by the robot in my home domain.

Ecample code

An example code to extract the codes and save the ArUco marker position in robot coordinates are implemented as a 'ArUcoVals' class in the uaruco.h and uaruco.cpp files. The found values are stored in an array 'arucos' of class objects of type 'ArUcoVal' (also in the uaruco.h and aruco.cpp files)

The extraction is in the function

int ArUcoVals::doArUcoProcessing(cv::Mat frame, int frameNumber, UTime imTime)

{

cv::Mat frameAnn;

const float arucoSqaureDimensions = 0.100; //meters

vector<int> markerIds;

vector<vector<cv::Point2f>> markerCorners; //, rejectedcandidates;

cv::aruco::DetectorParameters parameters;

//seach DICT on docs.opencv.org

cv::Ptr < cv::aruco::Dictionary> markerDictionary = cv::aruco::getPredefinedDictionary(cv::aruco::PREDEFINED_DICTIONARY_NAME::DICT_4X4_100);

// marker position info

vector<cv::Vec3d> rotationVectors, translationVectors;

UTime t; // timing calculation (for log)

t.now(); // start timing

// clear all flags (but maintain old information)

setNewFlagToFalse();

/** Each marker has 4 marker corners into 'markerCorners' in image pixel coordinates (float x,y).

* Marker ID is the detected marker ID, a vector of integer.

* */

cv::aruco::detectMarkers(frame, markerDictionary, markerCorners, markerIds);

// marker size is same as detected markers

if (markerIds.size() > 0)

{ // there are markers in image

if (debugImages)

{ // make a copy for nice saved images

frame.copyTo(frameAnn);

cv::aruco::drawDetectedMarkers(frameAnn, markerCorners, markerIds);

}

//

cv::aruco::estimatePoseSingleMarkers(markerCorners,

arucoSqaureDimensions,

cam->cameraMatrix,

cam->distortionCoefficients,

rotationVectors,

translationVectors);

}

else

printf("# No markers found\n");

The functions 'cv::aruco::detectMarkers(...)' and 'cv::aruco::estimatePoseSingleMarkers(...)' has the functionality, but requires som data setup to function, as shown above.

All found markers are then saved and their position and orientation is converted to robot coordinates. Robot coordinates are defined as (x,y,z), where x is forward, y is left and z is up (plus orientation around the same coordinates). The reference position is the centre between the driving wheels at ground level.

Each marker is updated with this code

ArUcoVal * v = getID(i);

if (v != NULL)

{ // save marker info and do coordinate conversion for marker

v->lock.lock();

v->markerId = i;

// set time and frame-number for this detection

v->imageTime = imTime;

v->frameNumber = frameNumber;

// v->frame = frame; // might use frame.copyTo(v->frame) insted

v->rVec = rotationVectors[j];

v->tVec = translationVectors[j];

//

if (debugImages)

{ // show detected orientation in image X:red, Y:green, Z:blue.

cv::aruco::drawAxis(frameAnn, cam->cameraMatrix, cam->distortionCoefficients, v->rVec, v->tVec, 0.03f); //X:red, Y:green, Z:blue.

}

//

v->markerToRobotCoordinate(cam->cam2robot);

v->isNew = true;

v->lock.unlock();

The coordinate conversion is in the 'markerToRobotCoordinate(cv::Mat cam2robot)' function.

This function also determines if the marker is vertical or horizontal, and estimates based on this the orientation as one angle around the robot z-axis (up). This can then be used as a destination pose, using the x,y from the marker position and heading (called 'markerAngle' )

The values for the marker is protected by a resource lock, as it is generated and used by different threads.

Installation

Get software

Get the ROBOBOT software from the svn repository:

svn checkout svn://repos.gbar.dtu.dk/jcan/regbot/mission mission

or just update if there already

svn up

Compile

To be able to compile the demo software CMAKE needs also to use the user installed library (raspicam installed above), so add the following line to ~/.bashrc:

export CMAKE_PREFIX_PATH=/usr/local/lib

Then build Makefiles and compile:

cd ~/mission mkdir -p build cd build cmake .. make -j3

Then test-run the application:

./mission

It should print that the camera is open and the bridge is connected to the REGBOT hardware.

Sound

The robot has small speakers

Music

A small class is available to play (and stop playing) music files.

The class is called 'UPlay' in the file uplay.h (header file only)

The following code illustrate its use:

UPlay play;

play.setFile("../The_thing_goes_Bassim.mp3");

play.setVolume(5); // % (0..100)

play.start();

...

play.stop();

In this case it is an mp3 file, but some other types should be playable as well.

Speak

system("espeak \"bettina reached point 3\" -ven+f4 -a30 -s130");

This line makes the robot say "bettina reached point 3" the parameters "-a30" turns amplitude down to 30%, "-ven+f4" sets language to english with female voice 4 and "-s130" makes the speech a little slower and easier to understand. It requires that espeak is installed (sudo apt install espeak).

To use on Raspberry pi, it is better to use

system("espeak \"Mission paused.\" -ven+f4 -s130 -a60 2>/dev/null &");

The "2>/dev/null" tell that error messages should be dumped, and the final "&" say that it should run in the background (not to pause the mission).

Software documentation (doxygen)

Figure: generated with doxygen http://aut.elektro.dtu.dk/robobot/doc/html/classes.html

The classes that inherit from UData are classes that makes data available for the mission, e.g. joystick buttons (in UJoy) event flags (in UEvent) or IR distance data (in UIRdist).

The classes that inherit from URun has a thread running to receive data from an external source, e.g. UBridge that handles communication with the ROBOBOT_BRIDGE.

The camera class (UCamera) is intended to do the image processing.

HTML documentation - Doxygen

To generate doxygen html files go to mission directory and run doxygen.

cd ~/mission doxygen Doxyfile

then open the index.html with a browser.

If doxygen is not installed, then install using

sudo apt install doxygen