Mission template software

Latest revision as of 12:47, 12 January 2020

ROBOBOT mission demo C++

This is example software in C++ that accesses both the Raspberry Pi camera and the REGBOT, with an example mission controlled from the Raspberry Pi.

Get software

Get the ROBOBOT software from the svn repository:

svn checkout svn://repos.gbar.dtu.dk/jcan/regbot/mission mission

or, if it is already checked out, just update it:

svn up

Compile

To compile the demo software, CMake must also be able to find the user-installed library (raspicam, installed above), so add the following line to ~/.bashrc:

export CMAKE_PREFIX_PATH=/usr/local/lib

Then build Makefiles and compile:

cd ~/mission
mkdir -p build
cd build
cmake ..
make

Then test-run the application:

./mission

It should print that the camera is open and that it is connected to the robot (bridge).

Software structure

The sound system can be used for debugging, e.g. add a C++ line like:

system("espeak \"bettina reached point 3\" -ven+f4 -a30 -s130");

This line makes the robot say "bettina reached point 3". The parameter "-a30" turns the amplitude down to 30%, "-ven+f4" selects English with female voice 4, and "-s130" makes the speech a little slower and easier to understand. It requires that espeak is installed (sudo apt install espeak).

On the Raspberry Pi, it is better to use:

system("espeak \"Mission paused.\" -ven+f4 -s130 -a60 2>/dev/null &");

The "2>/dev/null" means that error messages are discarded, and the final "&" means that the command runs in the background (so that it does not pause the mission).
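The two calls above differ only in the phrase and the amplitude, so they can be wrapped in a small helper. The following is a minimal sketch and not part of the mission software: the function names buildSpeakCommand and speak and their default values are assumptions chosen for illustration. It builds the same command line as the Raspberry Pi example and escapes characters that would otherwise break the double-quoted shell string.

```cpp
#include <cstdlib>
#include <string>

// Hypothetical helper (not in the mission software): builds the espeak
// command line shown above for an arbitrary phrase.
std::string buildSpeakCommand(const std::string& phrase,
                              int amplitude = 60, int speed = 130)
{
    std::string safe;
    for (char c : phrase) {
        // Escape characters that are special inside a double-quoted shell string.
        if (c == '"' || c == '\\' || c == '$' || c == '`')
            safe += '\\';
        safe += c;
    }
    // "2>/dev/null" discards error messages, "&" runs espeak in the background.
    return "espeak \"" + safe + "\" -ven+f4 -s" + std::to_string(speed) +
           " -a" + std::to_string(amplitude) + " 2>/dev/null &";
}

// Hypothetical wrapper: speaks the phrase without blocking the mission.
// Returns whatever std::system() returns.
int speak(const std::string& phrase)
{
    return std::system(buildSpeakCommand(phrase).c_str());
}
```

A mission step could then call speak("Mission paused."); keeping the string building separate from the system() call makes the command easy to inspect or log before it is run.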

AruCo Marker

The camera class contains some functions to detect AruCo markers. They are described in more detail on the AruCo Markers page.

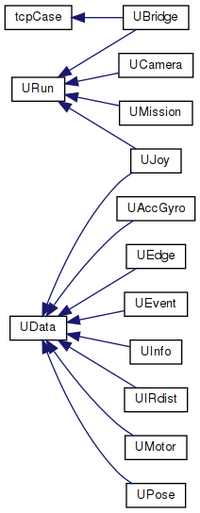

Software documentation (doxygen)

Figure: generated with doxygen http://aut.elektro.dtu.dk/robobot/doc/html/classes.html

The classes that inherit from UData make data available for the mission, e.g. joystick buttons (in UJoy), event flags (in UEvent) or IR distance data (in UIRdist).

The classes that inherit from URun have a thread running to receive data from an external source, e.g. UBridge, which handles communication with the ROBOBOT_BRIDGE.
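The split between data-holding classes and thread-owning classes can be illustrated with a small stand-alone sketch. It does not reproduce the real UData or URun interfaces: every class and method name below (RunSketch, DistanceDataSketch, FakeSourceSketch, receiveOnce and so on) is hypothetical, and the "external source" is faked with a constant value. It only shows the general pattern of a receive thread that updates data which the mission reads under a mutex.

```cpp
#include <atomic>
#include <chrono>
#include <mutex>
#include <thread>

// Hypothetical stand-in for a data class: holds the latest value for the mission.
class DistanceDataSketch
{
public:
    void set(float metres)
    {
        std::lock_guard<std::mutex> lock(mtx);
        value = metres;
        ++updates;
    }
    float get()
    {
        std::lock_guard<std::mutex> lock(mtx);
        return value;
    }
    int updateCount()
    {
        std::lock_guard<std::mutex> lock(mtx);
        return updates;
    }
private:
    std::mutex mtx;
    float value = 0.0f;
    int updates = 0;
};

// Hypothetical stand-in for a thread-owning base class.
class RunSketch
{
public:
    virtual ~RunSketch() { stop(); }
    void start()
    {
        if (worker.joinable())
            return; // already running
        running = true;
        worker = std::thread([this] { while (running) receiveOnce(); });
    }
    void stop()
    {
        running = false;
        if (worker.joinable())
            worker.join();
    }
protected:
    virtual void receiveOnce() = 0; // one receive step, called repeatedly by the thread
private:
    std::atomic<bool> running{false};
    std::thread worker;
};

// Hypothetical source that "receives" a fixed distance every millisecond.
class FakeSourceSketch : public RunSketch
{
public:
    explicit FakeSourceSketch(DistanceDataSketch& d) : data(d) {}
    // Stop the thread before this derived object is destroyed.
    ~FakeSourceSketch() override { stop(); }
protected:
    void receiveOnce() override
    {
        data.set(0.25f);
        std::this_thread::sleep_for(std::chrono::milliseconds(1));
    }
private:
    DistanceDataSketch& data;
};
```

In this sketch the derived destructor calls stop() itself, so the thread never calls receiveOnce() on a partly destroyed object. Whether the real classes handle shutdown the same way is not stated here.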

The camera class (UCamera) is intended to do the image processing.

HTML documentation - Doxygen

To generate the doxygen HTML files, go to the mission directory and run doxygen:

cd ~/mission
doxygen Doxyfile

Then open the generated index.html in a browser.

If doxygen is not installed, install it using:

sudo apt install doxygen